Why I Use NGINX with HAProxy in Production

Overview:

In this post, I will share the key reasons why I use NGINX open source and the HAProxy community edition in production environments.


Foreword

NGINX and HAProxy are popular open source load balancers and reverse proxies for HTTP and TCP applications (NGINX also handles UDP), known for being robust, reliable, scalable, and high-performing. Modern software is increasingly built around APIs, using small, independent, reusable microservices or modular monolith architectures, and both tools can also be deployed as an API gateway. (APIs can, of course, also be implemented in monolithic applications.)

However, NGINX has additional capabilities: it can function as a web server and pass requests to application servers over protocols such as FastCGI (for example, PHP-FPM), SCGI, and uwsgi, as well as to caching servers such as memcached. NGINX can also reliably serve static content, such as the build output of React web apps, and much more.

Both NGINX and HAProxy have an open source (community, freely available) edition and a commercial (subscription-based) edition. Drawing on personal experience, I will focus purely on the freely available editions, which I have relied on in testing/staging and production environments.

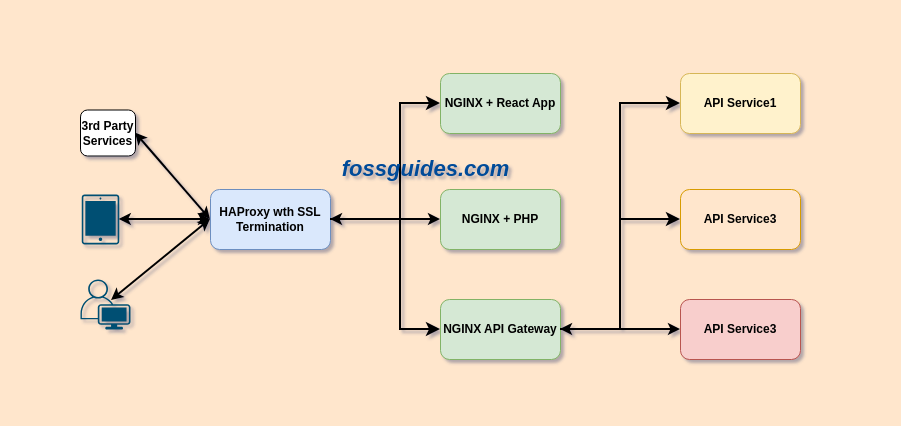

For this guide, I have considered the scenario illustrated in the image above. Here, HAProxy is an “entryxit service” (a name I coined for the role of HAProxy in our organization’s IT environment) and load balancer, while NGINX is a web server, reverse proxy, API gateway, and load balancer.

HAProxy offers a much simpler implementation of SSL/TLS termination

When it comes to SSL/TLS termination, that is, setting up HTTPS for your websites and applications, HAProxy is the better option. The HAProxy load balancer provides high-performance SSL termination: it decrypts incoming traffic and encrypts responses on behalf of your websites and applications.

Because HAProxy handles the TLS work at the edge, you can quickly and easily enable HTTPS for your applications while the backend servers receive plain HTTP.

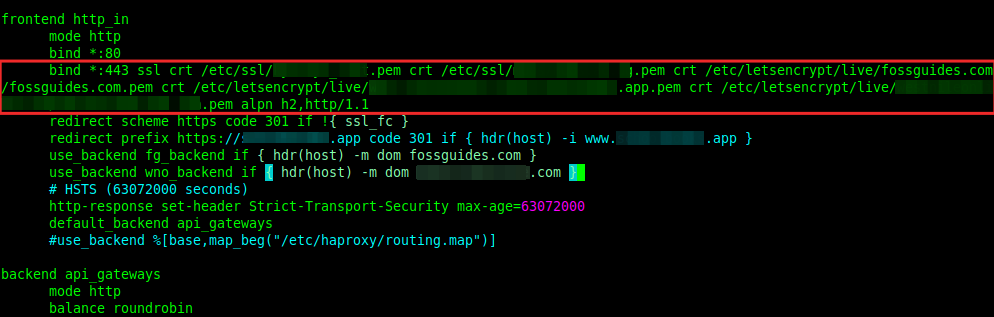

Consider a scenario where you have to manage several domains and sub-domains in your IT environment, and each of them needs SSL configured. In HAProxy, you can achieve this with a single line of configuration, as shown in the image above.

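To enable SSL termination in HAProxy, add the ssl and crt parameters to a bind line in a frontend section. For multiple certificates, repeat the crt parameter; HAProxy selects the matching certificate for each connection based on the SNI hostname the client sends. The crt parameter can also point to a directory, in which case HAProxy loads all the certificates it contains. Below is a sample configuration: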

```haproxy
....
bind *:443 ssl crt /etc/ssl/example.com.pem crt /etc/ssl/example2.com.pem crt /etc/letsencrypt/live/fossguides.com/fossguides.com.pem crt /etc/letsencrypt/live/testapp.app/testapp.app.pem crt /etc/letsencrypt/live/testsite.com/testsite.com.pem alpn h2,http/1.1
....
```

Benefits of using HAProxy for SSL termination

- HAProxy offers high-performance SSL termination,

- It allows you to keep multiple certificates in one place (or a few), making your job easier,

- It relieves your web servers from the burden of processing encrypted messages, freeing up CPU time,

- It prevents your web servers from being exposed to the Internet for certificate renewal purposes, and

- It is incredibly resource-efficient.

Note: HAProxy can also read the SNI (Server Name Indication) field of the TLS handshake to route port 443 traffic to backend servers without terminating TLS (TCP-mode passthrough). This is useful depending on your environment's requirements and setup.

Although NGINX can perform SSL termination, each server block must be configured with its own SSL certificate and key, plus other relevant SSL settings. In an IT environment with many domains and sub-domains to manage, using NGINX for SSL termination becomes hectic. That is why I always use HAProxy as the load balancer and SSL offloader.
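For comparison, here is a minimal sketch of SSL termination in NGINX for just two sites. The domains (example.com, example2.com), the certbot-style certificate paths, and the backend ports are hypothetical placeholders. Both server blocks belong in the http context, and every additional domain needs another block like them.

```nginx
# Hypothetical site 1: its own certificate, key, and TLS settings
server {
    listen 443 ssl;
    server_name example.com;

    ssl_certificate     /etc/letsencrypt/live/example.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/example.com/privkey.pem;
    ssl_protocols       TLSv1.2 TLSv1.3;

    location / {
        proxy_pass http://127.0.0.1:3000;   # hypothetical plain-HTTP backend
    }
}

# Hypothetical site 2: the same settings repeated with different files
server {
    listen 443 ssl;
    server_name example2.com;

    ssl_certificate     /etc/letsencrypt/live/example2.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/example2.com/privkey.pem;
    ssl_protocols       TLSv1.2 TLSv1.3;

    location / {
        proxy_pass http://127.0.0.1:3001;   # hypothetical plain-HTTP backend
    }
}
```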

NGINX is a web server at its core

At its core, NGINX is a web server, while HAProxy is not: it is purely a load balancer and reverse proxy. NGINX can serve static content and forward traffic to application servers for PHP, Python, Java, Ruby, and other languages. It can also deliver media (audio and video), integrate with authentication and security systems, and much more.

NGINX ships with several web server features such as virtual server multi-tenancy, URI and response rewriting, compression and decompression, variables, error handling, and more.

Benefits of using NGINX as a web server

NGINX can be deployed:

- to serve websites or applications using virtual servers (commonly known as virtual hosts in Apache HTTPD),

- to serve static files from frontend applications such as React,

- to pass requests to proxied servers for HTTP, TCP/UDP, and other protocols, with support for modifying request headers and fine-tuned buffering of responses,

- to compress server responses, or decompress them for clients that don’t support compression, to improve delivery speed and reduce network overhead, and much more.
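Here is a minimal sketch that combines several of these features in one virtual server: serving a static build from disk, gzip compression, and a proxied location that sets request headers and tunes response buffering. The server name, paths, port, and buffer sizes are illustrative assumptions, not recommendations.

```nginx
# Hypothetical virtual server; names, paths, and ports are placeholders
server {
    listen 8080;
    server_name static.example.com;

    # Serve static files (e.g. a React production build) from disk
    root /var/www/example/build;

    # Compress text responses for clients that send "Accept-Encoding: gzip"
    # (text/html is always compressed once gzip is on)
    gzip            on;
    gzip_types      text/css application/javascript application/json image/svg+xml;
    gzip_min_length 256;   # skip very small responses
    gzip_vary       on;    # add "Vary: Accept-Encoding" for caches

    location / {
        try_files $uri $uri/ =404;
    }

    # Proxy API calls, adjust request headers, and tune response buffering
    location /api/ {
        proxy_pass        http://127.0.0.1:9090;
        proxy_set_header  Host $host;
        proxy_set_header  X-Real-IP $remote_addr;
        proxy_buffering   on;
        proxy_buffer_size 8k;      # buffer for the first part of the response (headers)
        proxy_buffers     16 8k;   # buffers for the rest of the response body
    }
}
```

Decompressing responses for clients that don’t support gzip is handled by the separate gunzip directive (ngx_http_gunzip_module), which is not built by default, so it is left out of this sketch.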

In NGINX, each virtual server for HTTP traffic defines special configuration blocks called locations that control how specific sets of URIs are processed. Each location has its own configuration that determines what happens to the requests and responses mapped to it.
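To illustrate how locations work, here is a sketch of the main location types in one hypothetical virtual server. NGINX first looks for an exact (=) match; otherwise it remembers the longest matching prefix, stops there if that prefix is marked ^~, and else checks the regular-expression locations in the order they appear, using the first one that matches before falling back to the remembered prefix. The server name, root, and cache lifetimes are placeholders.

```nginx
server {
    listen 8080;
    server_name www.example.com;   # hypothetical
    root /var/www/example;         # hypothetical document root

    # Exact match: only the URI "/healthz"
    location = /healthz {
        default_type text/plain;
        return 200 "ok\n";
    }

    # Prefix match; "^~" tells NGINX to skip regex locations when this prefix wins
    location ^~ /static/ {
        expires 7d;
    }

    # Case-insensitive regex match for image files
    location ~* \.(png|jpe?g|gif|svg)$ {
        expires 30d;
    }

    # Matches every URI; used when no exact, longer prefix, or regex location applies
    location / {
        try_files $uri $uri/ =404;
    }
}
```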

As a reverse proxy, NGINX can pass requests to an HTTP server (another NGINX server or any other server, such as Apache) or to a non-HTTP server running an application written in a language such as Python or PHP, using a specified protocol. NGINX supports protocols such as FastCGI, uwsgi, SCGI, and memcached.
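Here is a minimal sketch of the FastCGI and uwsgi cases, assuming a PHP-FPM pool on a Unix socket and a Python application served by uWSGI on a local port. The socket path, address, server name, and document root are hypothetical; use the values from your own PHP-FPM and uWSGI setup.

```nginx
server {
    listen 8080;
    server_name app.example.com;     # hypothetical
    root /var/www/app.example.com;   # hypothetical document root

    # PHP: hand .php requests to PHP-FPM over FastCGI
    location ~ \.php$ {
        try_files $uri =404;   # return 404 for missing scripts instead of passing them on
        include       fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_pass  unix:/run/php/php-fpm.sock;   # hypothetical socket path
    }

    # Python: hand /app/ requests to a uWSGI server
    location /app/ {
        include    uwsgi_params;
        uwsgi_pass 127.0.0.1:3031;   # hypothetical uWSGI address
    }
}
```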

Here is a sample NGINX configuration for serving a React application with a Node.js API service:

```nginx
# Upstream group for the Node.js API service (placeholder backend addresses)
upstream api_svc_cluster {
    server 10.1.1.10:9090;
    server 10.1.1.11:9090;
    server 10.1.1.12:9090;
}

server {
    # Plain HTTP; HAProxy in front handles TLS
    listen 8080;
    server_name www.fossguidestest.app fossguidestest.app;

    # React production build
    root /var/www/apps/fossguidestest/build;
    index index.html;

    # ModSecurity: load_module is only valid in the main context of nginx.conf, not here
    #load_module modules/ngx_http_modsecurity_module.so;

    include snippets/error-page.conf;

    client_max_body_size 10M;

    # Serve the file or directory if it exists; otherwise fall back to
    # index.html so the React router can handle the path
    location / {
        try_files $uri $uri/ /index.html;
    }

    location /api {
        proxy_pass http://api_svc_cluster;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        # HTTP/1.1 and the Upgrade/Connection headers enable WebSocket proxying
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }

    location /assets {
        proxy_pass http://api_svc_cluster;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }
}
```

Because HAProxy is intended only as a load balancer, I always use it together with NGINX to serve websites and applications. Note that both can be deployed as an API gateway; I will explain more on this shortly.

NGINX open source supports integrating the ModSecurity Web Application Firewall (WAF)

Security is paramount in any IT environment. One of the key security implementation tools to deploy in a modern web-based application stack is a web application firewall (or WAF in short).

ModSecurity is one of the most popular open-source, cross-platform WAFs available. Long maintained by Trustwave’s SpiderLabs, it works with the Apache, IIS, and NGINX web servers (on NGINX, through the ModSecurity-nginx connector module). ModSecurity enables HTTP traffic monitoring, logging, and real-time analysis, thus protecting web applications from a range of attacks.

Unfortunately, the HAProxy community edition does not support ModSecurity natively, but NGINX does. Because of this, I cannot use HAProxy without NGINX.
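For reference, here is a minimal nginx.conf outline for enabling ModSecurity, assuming the ModSecurity-nginx connector has been built as a dynamic module and that a rules file exists at the hypothetical path shown (for example, one that includes modsecurity.conf and the OWASP Core Rule Set). The server name and application address are also placeholders.

```nginx
# Main context (top of nginx.conf, outside the http block):
# load the dynamic ModSecurity-nginx connector module
load_module modules/ngx_http_modsecurity_module.so;

events {}

http {
    server {
        listen 8080;
        server_name www.example.com;   # hypothetical

        # Enable the WAF for this virtual server
        modsecurity            on;
        modsecurity_rules_file /etc/nginx/modsec/main.conf;   # hypothetical rules file

        location / {
            proxy_pass http://127.0.0.1:3000;   # hypothetical application
        }
    }
}
```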

Both can be deployed as an API Gateway

An API gateway is a go-between or intermediary server that provides a unified, consistent access point for numerous APIs, regardless of how they are implemented or deployed at the backend. It receives requests from clients (internal or external), forwards them to backend API endpoints, and returns the API service’s response to the client.

An API gateway handles several aspects of API service management, such as request routing, authentication and authorization, connection queuing, load balancing, rate limiting and throttling, logging and monitoring, circuit-breaking, and overall security. It can also handle API version management and documentation.
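As an example of one of these policies, here is a sketch of per-client rate limiting with NGINX’s limit_req module. Because NGINX sits behind HAProxy in the scenario described here, every request would otherwise appear to come from HAProxy’s address, so the sketch also uses the realip module (ngx_http_realip_module) to take the client IP from X-Forwarded-For, which HAProxy adds when option forwardfor is set. The HAProxy address (10.1.1.5), the backend, the server name, and the limits are hypothetical values.

```nginx
# http context
# Trust the client IP that HAProxy (hypothetical address) passes in X-Forwarded-For
set_real_ip_from 10.1.1.5;
real_ip_header   X-Forwarded-For;

# Key on the client IP and allow an average of 10 requests per second per client;
# a 10 MB zone holds state for roughly 160,000 addresses
limit_req_zone $binary_remote_addr zone=api_per_ip:10m rate=10r/s;

upstream api_backend {
    server 10.1.1.10:9000;   # hypothetical backend
}

server {
    listen 8080;
    server_name api.example.com;   # hypothetical

    location /api/ {
        # Allow bursts of up to 20 extra requests without delaying them;
        # requests beyond the burst are rejected
        limit_req        zone=api_per_ip burst=20 nodelay;
        limit_req_status 429;   # "Too Many Requests" instead of the default 503
        proxy_pass       http://api_backend;
    }
}
```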

If your frontend applications are separated from backend API services, then you need an API gateway to forward requests and responses between the two application layers. Both NGINX and HAProxy offer advanced HTTP processing capabilities needed for handling API traffic, thus filling the role of an API gateway extremely well.

Because I usually deploy NGINX behind HAProxy, I prefer to use NGINX rather than HAProxy as the API gateway. Recently, I tested HAProxy’s basic capabilities as an API gateway, and it worked well, until I realized I couldn’t use it with ModSecurity. I switched back to NGINX!

Here is a sample NGINX API gateway configuration:

```nginx
upstream fg_booking_api {
    zone booking_service 64k;
    server 10.1.1.10:9000;
    server 10.1.1.11:9000;
}

upstream fg_payments_api {
    zone payments_service 64k;
    server 10.1.1.12:9001;
    server 10.1.1.13:9001;
}

upstream fg_receipts_api {
    zone receipts_service 64k;
    server 10.1.1.14:9002;
    server 10.1.1.15:9002;
}

upstream fg_reports_api {
    zone reports_service 64k;
    server 10.1.1.16:9003;
    server 10.1.1.17:9003;
}

server {
    listen 8080;
    server_name api.fossguidesbooking.app;

    # "custom" must be a log_format defined in the http context
    access_log /var/log/nginx/api_access.log custom;

    location /api/v2/ {
        # Policy configuration here (authentication, rate limiting, logging...)
        access_log /var/log/nginx/fg_bookingapp_api.log custom;

        # URI routing: the longest matching nested prefix wins
        location /api/v2/booking/ {
            proxy_pass http://fg_booking_api;
        }

        location /api/v2/payments {
            proxy_pass http://fg_payments_api;
        }

        location /api/v2/receipts {
            proxy_pass http://fg_receipts_api;
        }

        location /api/v2/reports {
            proxy_pass http://fg_reports_api;
        }

        return 404; # Catch-all for /api/v2/ paths that match no route above
    }

    # Error responses
    proxy_intercept_errors on;     # Replace backend errors (status >= 300) that have a matching error_page
    default_type application/json; # If no content-type, assume JSON
}
```
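Note that proxy_intercept_errors only takes effect for status codes that have a matching error_page directive, and NGINX’s built-in error pages are HTML. Here is a sketch of JSON error responses that could be placed at server level inside the API server block above; the messages and the choice of status codes are assumptions. Named locations (@...) must be defined at server level, not nested inside another location.

```nginx
# Server level, inside the API server block
error_page 404 = @404;            # also applies to the "return 404" catch-all
error_page 502 503 504 = @503;    # upstream failures

location @404 {
    default_type application/json;
    return 404 '{"status":404,"message":"Resource not found"}\n';
}

location @503 {
    default_type application/json;
    return 503 '{"status":503,"message":"Service unavailable"}\n';
}
```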

Final remarks

In this post, I shared the key reasons why I always use the HAProxy community edition and NGINX open source in production IT environments to serve simple websites, robust web applications, and APIs. I prefer to deploy HAProxy in front of NGINX and use the latter as a web server, reverse proxy, API gateway, and so on, although it also handles load balancing very well.

If SSL termination in NGINX were as simple as it is in HAProxy, I would use NGINX alone. What is your experience with these two tools? How do you usually use them? Let me know in the comments.
