Are Node.js Process Managers Still Relevant with the Growing Use of Containers?

Overview:

In this post, I will discuss what a Node.js process manager is and share some popular examples. Then I will discuss containers and container orchestration. Finally, I will examine whether containerization and container orchestration have made Node.js process managers irrelevant.


What is a Node.js process manager?

A Node.js process manager is a tool for managing a Node.js application at runtime. It handles common administration tasks such as starting, stopping, reloading, and restarting the application. It also supports clustering and high availability: if an application process crashes, the process manager restarts it automatically.
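To make the automatic-restart idea concrete, here is a minimal TypeScript sketch of one restart policy a process manager might apply: exponential backoff with a cap, plus a limit on consecutive crashes. It is a hypothetical model, not the actual logic of PM2, Forever, or systemd. `RestartPolicy`, `restartDelay`, and `supervise` are illustrative names, and `runOnce` stands in for launching the app and waiting for it to exit.

```typescript
// Hypothetical restart policy for a supervised process (illustrative only).
interface RestartPolicy {
  baseDelayMs: number; // wait before the first restart
  maxDelayMs: number; // cap on any single wait
  maxRestarts: number; // give up after this many consecutive crashes
}

// Exponential backoff: the wait doubles after each consecutive crash, up to maxDelayMs.
function restartDelay(policy: RestartPolicy, consecutiveCrashes: number): number {
  const delay = policy.baseDelayMs * Math.pow(2, consecutiveCrashes - 1);
  return Math.min(delay, policy.maxDelayMs);
}

// Simulated supervision loop. `runOnce` stands in for starting the app and
// waiting for it to exit: true means a clean exit, false means a crash.
// This sketch only restarts after crashes, and instead of actually sleeping
// it returns the delays it would have waited.
function supervise(policy: RestartPolicy, runOnce: () => boolean): number[] {
  const delays: number[] = [];
  let crashes = 0;
  while (!runOnce()) {
    crashes += 1;
    if (crashes > policy.maxRestarts) {
      break; // too many crashes in a row: stop restarting
    }
    delays.push(restartDelay(policy, crashes));
  }
  return delays;
}

// Usage: an app that crashes three times and then exits cleanly.
const policy: RestartPolicy = { baseDelayMs: 100, maxDelayMs: 1000, maxRestarts: 5 };
let attempts = 0;
const observedDelays = supervise(policy, () => ++attempts > 3);
console.log(observedDelays); // [ 100, 200, 400 ]
```

Backing off between restarts keeps an app that crashes on startup from being relaunched in a tight loop. PM2, for example, offers an exponential backoff restart delay option; check its documentation for the exact behavior rather than relying on this sketch.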

A process manager can also adjust application settings dynamically to improve performance, and monitor running processes to give you insight into runtime performance and resource consumption. It also supports resource allocation and usage control, among other things.
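Resource usage control often comes down to a simple rule: restart a process whose memory grows past a limit. PM2, for instance, exposes a `max_memory_restart` option for this. The TypeScript sketch below only models the decision. The `ProcessSample` shape, the `"300M"`-style limit format handled by the hypothetical `parseMemoryLimit`, and the sample numbers are assumptions for illustration; a real process manager would read memory figures from the operating system.

```typescript
// Hypothetical memory check a process manager could run on each monitoring tick.
interface ProcessSample {
  pid: number;
  name: string;
  rssBytes: number; // resident memory reported for the process
}

// Parse limits written like "300M" or "1G" (an assumed, simplified format).
function parseMemoryLimit(limit: string): number {
  const match = /^(\d+)([KMG])$/.exec(limit.trim().toUpperCase());
  if (match === null) {
    throw new Error(`Unsupported memory limit: ${limit}`);
  }
  const multipliers: Record<string, number> = {
    K: 1024,
    M: 1024 * 1024,
    G: 1024 * 1024 * 1024,
  };
  return Number(match[1]) * multipliers[match[2]];
}

// Return the processes whose memory use exceeds the limit and should be restarted.
function overLimit(samples: ProcessSample[], limit: string): ProcessSample[] {
  const maxBytes = parseMemoryLimit(limit);
  return samples.filter((sample) => sample.rssBytes > maxBytes);
}

// Usage with made-up numbers for two clustered instances of the same app.
const samples: ProcessSample[] = [
  { pid: 101, name: "api-0", rssBytes: 180 * 1024 * 1024 },
  { pid: 102, name: "api-1", rssBytes: 420 * 1024 * 1024 },
];
const toRestart = overLimit(samples, "300M").map((sample) => sample.name);
console.log(toRestart); // [ 'api-1' ]
```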

Examples of Node.js process managers

1. PM2 is a production-ready process manager for Node.js applications that keeps applications running forever. It comes with a built-in load balancer, lets you reload applications without downtime, and helps you manage application clustering, logging, and monitoring.

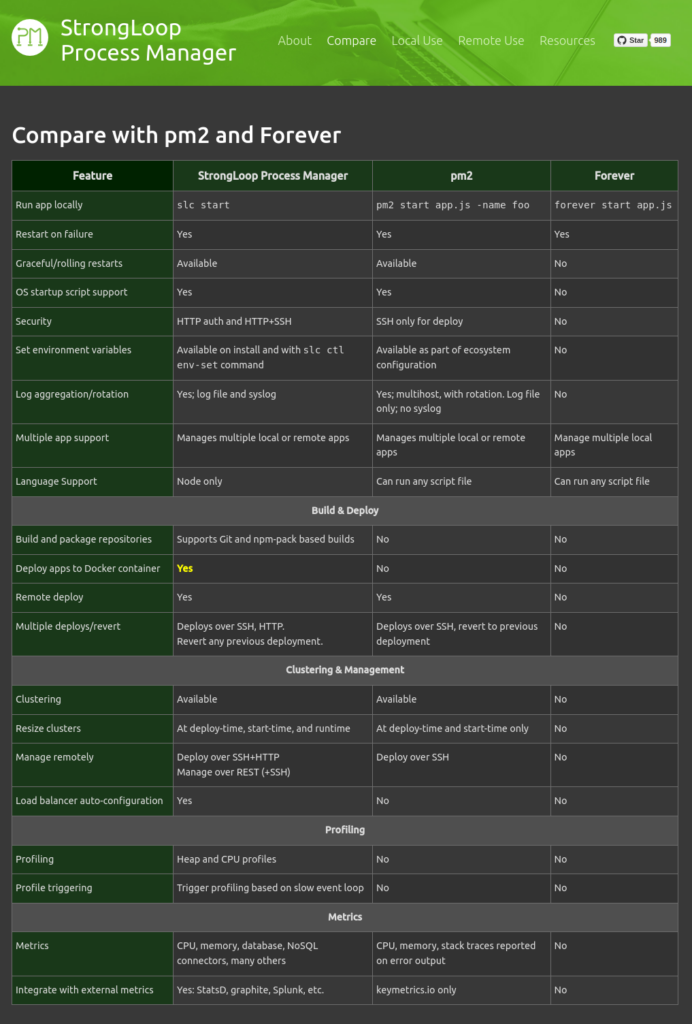

2. StrongLoop Process Manager (Strong-PM) is another production-grade process manager for Node.js applications. It also ships with built-in load balancing, monitoring, and multi-host deployment. Strong-PM provides a command-line interface (CLI) to build, package, and deploy Node.js applications to a local or remote system.

3. Forever is a simple CLI tool designed to keep scripts running continuously (forever). Because of its simplicity, it is best suited to smaller deployments of Node.js apps and scripts.

4. systemd is the default system and service manager on most modern Linux distributions. You can configure it to run a Node.js application as a service.

The following image shows a comparison between StrongLoop PM, PM2, and Forever.

Source: https://strong-pm.io/compare/
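The built-in load balancing that PM2 and Strong-PM advertise spreads incoming requests over several copies of the app. PM2's cluster mode is built on Node.js's `cluster` module, which by default (on every platform except Windows) hands new connections to workers in round-robin order. The TypeScript sketch below models only that dispatch rule; the `RoundRobinBalancer` class and the worker labels are hypothetical, and no real processes or sockets are involved.

```typescript
// Hypothetical model of round-robin dispatch across clustered worker processes.
// Workers are plain labels; no real processes or sockets are created.
class RoundRobinBalancer {
  private next = 0;
  private readonly alive: boolean[];

  constructor(private readonly workers: string[]) {
    this.alive = workers.map(() => true);
  }

  // Take a worker out of rotation, e.g. while the process manager restarts it.
  markDown(worker: string): void {
    this.setAlive(worker, false);
  }

  // Put a restarted worker back into rotation.
  markUp(worker: string): void {
    this.setAlive(worker, true);
  }

  // Hand the next request to the next live worker, skipping workers that are down.
  pick(): string {
    for (let tried = 0; tried < this.workers.length; tried++) {
      const index = this.next;
      this.next = (this.next + 1) % this.workers.length;
      if (this.alive[index]) {
        return this.workers[index];
      }
    }
    throw new Error("No live workers available");
  }

  private setAlive(worker: string, value: boolean): void {
    const index = this.workers.indexOf(worker);
    if (index >= 0) {
      this.alive[index] = value;
    }
  }
}

// Usage: three workers, then one crashes.
const balancer = new RoundRobinBalancer(["worker-1", "worker-2", "worker-3"]);
const firstFour = [balancer.pick(), balancer.pick(), balancer.pick(), balancer.pick()];
console.log(firstFour); // [ 'worker-1', 'worker-2', 'worker-3', 'worker-1' ]
balancer.markDown("worker-2");
const afterCrash = [balancer.pick(), balancer.pick()];
console.log(afterCrash); // [ 'worker-3', 'worker-1' ]
```

Taking a crashed worker out of rotation until it is restarted is what lets a clustered app keep serving requests while one of its processes is down.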

When you use a Node.js process manager, you have to clone or upload your application's source code to the server it will run on, and install all dependencies and required system packages. If you deploy to multiple servers for high availability, you must repeat this on every server.

What about using application containers, such as Docker, to package your applications and deploy them to different environments? Does that make the whole process easier? Let's find out.

What are containers?

A container bundles up everything required to make an application run. That bundle is packaged as a container image: a lightweight, standalone, executable package that includes everything needed to run an application, namely a base operating system layer (system libraries and tools, but not a kernel, since containers share the host's kernel), system packages, the application source code, and all its dependencies and settings. When the image is running, it becomes a container.

This lets you move your application from one computing environment to another and run it quickly and reliably. All you need to do is provision a server with a supported operating system and install a container runtime such as Docker on it. Then copy your container image to the server (or pull it from a registry) and use the Docker CLI to run it.

I just mentioned Docker, which is the most popular container image format, but it is not the only one. Others include LXD images and the Cloud Native Application Bundle (CNAB) format. However, the industry is moving toward a standard governed by the Open Container Initiative (OCI).

Examples of container engines or container runtimes

Running a container image requires a container engine or container runtime. The most popular is Docker, but there are others, such as CRI-O and LXC. A container engine takes a container image and turns it into a container (a running process).

Once a container starts successfully, the application inside it starts as well, provided the instructions in the image are defined correctly, and the application can then be reached from the server.

If you are running numerous containers or want to set up clusters for containerized workloads within your infrastructure, you need a container orchestration tool.

Container orchestration

Container orchestration is the automation of the operational effort (such as provisioning, networking, deployment, management, and scaling) required to run containerized workloads and services without worrying about the underlying infrastructure. You can use container orchestration in any environment where you run containers to automate their life cycle management.
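Orchestrators automate this life cycle with control loops: you declare the desired state, and a controller repeatedly compares it with what is actually running and acts on the difference (a pattern commonly called reconciliation). The TypeScript sketch below is a toy version for a single app. `DesiredState`, `RunningContainer`, `reconcile`, and the image tags are hypothetical, and the function only computes actions rather than calling any real orchestrator API.

```typescript
// Toy reconciliation step: compare the declared state with what is running
// and compute the actions needed to close the gap. All names are hypothetical.
interface DesiredState {
  image: string; // image every replica should run
  replicas: number; // how many containers should be running
}

interface RunningContainer {
  id: string;
  image: string;
}

type Action = { kind: "start"; image: string } | { kind: "stop"; id: string };

function reconcile(desired: DesiredState, running: RunningContainer[]): Action[] {
  const actions: Action[] = [];

  // Containers on an outdated image are stopped; replacements are started below.
  const upToDate = running.filter((c) => c.image === desired.image);
  for (const c of running) {
    if (c.image !== desired.image) {
      actions.push({ kind: "stop", id: c.id });
    }
  }

  // Too many up-to-date containers: stop the extras.
  for (let i = desired.replicas; i < upToDate.length; i++) {
    actions.push({ kind: "stop", id: upToDate[i].id });
  }

  // Too few: start the missing ones.
  for (let i = upToDate.length; i < desired.replicas; i++) {
    actions.push({ kind: "start", image: desired.image });
  }
  return actions;
}

// Usage: three replicas of a new image are declared; one new and one old container run.
const plannedActions = reconcile({ image: "my-node-app:1.2.0", replicas: 3 }, [
  { id: "c1", image: "my-node-app:1.2.0" },
  { id: "c2", image: "my-node-app:1.1.0" },
]);
console.log(plannedActions);
```

For this input the result is one `stop` action for the outdated `c2` and two `start` actions for the new image; once those are carried out, calling `reconcile` again returns no actions. A real controller would also pace these changes; Kubernetes Deployments, for example, replace old Pods gradually during a rolling update.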

Simply put, a container orchestration tool does two main things. First, it provides a standard way for you to define how your containers should run, based on the objects it supports. Second, it dynamically schedules container workloads across a cluster of computers.
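Scheduling means deciding which machine in the cluster runs each container. Real schedulers such as kube-scheduler first filter out nodes that cannot run a workload and then score the remaining ones using many criteria. The TypeScript sketch below keeps just one filter (enough free CPU) and one score (most free CPU); `ClusterNode`, `Workload`, `schedule`, and the millicore figures are assumptions for illustration.

```typescript
// Hypothetical scheduler sketch. CPU is measured in millicores (1000m = 1 core).
interface ClusterNode {
  name: string;
  freeMillicores: number;
}

interface Workload {
  name: string;
  cpuMillicores: number; // CPU the container asks for
}

// Returns workload name -> node name, or null when no node can fit the workload.
function schedule(nodes: ClusterNode[], workloads: Workload[]): Record<string, string | null> {
  // Work on a copy so the caller's node list is not modified.
  const capacity = nodes.map((n) => ({ name: n.name, freeMillicores: n.freeMillicores }));
  const placement: Record<string, string | null> = {};

  for (const workload of workloads) {
    let best: ClusterNode | null = null;
    for (const node of capacity) {
      const fits = node.freeMillicores >= workload.cpuMillicores; // filter step
      if (fits && (best === null || node.freeMillicores > best.freeMillicores)) {
        best = node; // score step: prefer the node with the most free CPU
      }
    }
    if (best === null) {
      placement[workload.name] = null; // stays pending until capacity frees up
    } else {
      best.freeMillicores -= workload.cpuMillicores;
      placement[workload.name] = best.name;
    }
  }
  return placement;
}

// Usage with made-up capacities and requests.
const clusterNodes: ClusterNode[] = [
  { name: "node-a", freeMillicores: 2000 },
  { name: "node-b", freeMillicores: 1800 },
];
const plan = schedule(clusterNodes, [
  { name: "api-1", cpuMillicores: 500 },
  { name: "api-2", cpuMillicores: 500 },
  { name: "worker-1", cpuMillicores: 1200 },
  { name: "batch-1", cpuMillicores: 3000 },
]);
console.log(plan);
```

With these numbers, `api-1` and `worker-1` land on `node-a`, `api-2` lands on `node-b`, and `batch-1` stays unscheduled (`null`) because no node has 3000 millicores free.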

Additionally, a container orchestrator supports load balancing, secret and configuration management, storage management, automated deployment, autoscaling, resource consumption management, logging, monitoring, and more.
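Autoscaling usually follows a simple proportional rule. The Kubernetes documentation gives the Horizontal Pod Autoscaler's core formula as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and the controller skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The TypeScript sketch below implements only that rule for a single metric, clamped to minimum and maximum replica counts. It ignores stabilization windows and Pod readiness, and `ScalingInput` and `desiredReplicas` are hypothetical names.

```typescript
// Simplified version of the Horizontal Pod Autoscaler's documented core rule:
//   desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
// Ignores stabilization windows, Pod readiness, and multiple metrics.
interface ScalingInput {
  currentReplicas: number;
  currentMetric: number; // e.g. average CPU utilization across replicas, in percent
  targetMetric: number; // the utilization you asked for
  minReplicas: number;
  maxReplicas: number;
  tolerance?: number; // defaults to 0.1, matching the HPA default
}

function desiredReplicas(input: ScalingInput): number {
  const tolerance = input.tolerance ?? 0.1;
  const ratio = input.currentMetric / input.targetMetric;
  // Close enough to the target: skip scaling to avoid flapping.
  if (Math.abs(ratio - 1) <= tolerance) {
    return input.currentReplicas;
  }
  const proposed = Math.ceil(input.currentReplicas * ratio);
  // Keep the result inside the configured bounds.
  return Math.min(input.maxReplicas, Math.max(input.minReplicas, proposed));
}

// Usage: a target of 60% CPU with 2 to 10 replicas allowed.
const bounds = { targetMetric: 60, minReplicas: 2, maxReplicas: 10 };
const scaleUp = desiredReplicas({ ...bounds, currentReplicas: 4, currentMetric: 90 });
const steady = desiredReplicas({ ...bounds, currentReplicas: 6, currentMetric: 63 });
const scaleDown = desiredReplicas({ ...bounds, currentReplicas: 4, currentMetric: 20 });
console.log(scaleUp, steady, scaleDown); // 6 6 2
```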

Examples of container orchestration tools

There are several container orchestration tools and platforms out there, and they fall into two categories: self-managed and fully managed solutions. Self-managed container orchestration tools are either built from scratch or based on open-source platforms. They give you complete control over customization within your IT environment, but this means you have to take on the burden of managing and maintaining the platform.

Self-Managed Container Orchestration Tools

1. Kubernetes (sometimes shortened to K8s) is the most popular open-source container orchestration platform for cloud-native development. Originally developed by Google, it is considered the de facto choice for deploying and managing containers. It also works well on on-premises infrastructure.

2. Docker Swarm mode is a built-in advanced feature in Docker for natively managing a cluster of Docker Engines called a swarm. You use the Docker CLI to create a swarm, deploy application services to a swarm, and manage swarm behavior.

3. Rancher is a complete software stack that addresses the operational and security challenges of managing multiple Kubernetes clusters while providing DevOps teams with integrated tools for running containerized workloads.

4. Red Hat OpenShift is a leading hybrid cloud application platform powered by Kubernetes that delivers a consistent experience across public cloud, on-premises, hybrid cloud, and edge architectures.

5. HashiCorp Nomad is a simple and flexible scheduler and orchestrator for deploying and managing containerized and non-containerized applications across on-premises and cloud environments at scale.

6. Apache Mesos is a distributed systems kernel that is built using the same principles as the Linux kernel, only at a different level of abstraction. It offers native support for launching containers with Docker and AppC image formats.

Managed Container Orchestration Services

The other option is to use a fully managed solution, or Containers as a Service (CaaS) offering, from a cloud provider such as Google (e.g., Google Kubernetes Engine (GKE)), Amazon (e.g., Amazon Elastic Kubernetes Service (EKS)), Microsoft (e.g., Azure Kubernetes Service (AKS)), or IBM (e.g., IBM Cloud Kubernetes Service).

With managed container orchestration platforms or CaaS, the heavy lifting is not yours: the cloud provider is responsible for installing and operating the platform. All you need to do is use its capabilities and focus on running your containerized applications.

Final words: My experience and opinion

Before I learned containerization using Docker and container orchestration (initially with Docker Swarm but now with Kubernetes), I relied on process managers like PM2 to run Node.js applications.

At the time, to enable high availability, I had to set up several servers running the same application. To update the application, I would log into each server, pull the latest changes from GitHub and GitLab, and restart PM2. Later, I used Ansible to manage all the servers, which eased the whole process.

After learning Docker, building CI pipelines, and adopting container orchestration tools like Docker Swarm and eventually Kubernetes, I realized how much easier these tools made it for my team and me to deploy and run our Node.js applications in production. As a result, I abandoned PM2.

Given all the benefits of containerization and container orchestration tools, Node.js process managers now have little place in running and managing modern applications in production, whether in the cloud or on-premises.

However, many developers and system administrators today do not have practical knowledge of or experience with containerization and container orchestration tools. To run their Node.js applications in production, they rely on tools like the process managers listed above. I think Node.js process managers are still relevant and widely used, but only among those who lack practical knowledge of containerization technologies.

What's your take? Let me know in the comments section.
