How To Deploy HAProxy as a Load Balancer for NGINX on Linux Servers

Overview:

This guide describes deploying HAProxy as a load balancer for NGINX on Linux servers.

Foreword

HAProxy is a free, open-source, reliable, high-performance HTTP/TCP load balancer with a straightforward SSL termination implementation. NGINX is a free and open-source web server that can also be used as a reverse proxy, load balancer, mail proxy, HTTP cache, and application accelerator.

In a previous post, I shared key reasons why I always use HAProxy together with NGINX in production, even though the latter can be deployed alone and still offers reliable performance.

To follow this guide, ensure that you have installed HAProxy and NGINX on your Linux server(s).

Once you have HAProxy and NGINX packages installed on your Linux server(s), you can proceed to configure them as follows.
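Before moving on, you may want to confirm that both programs are on the PATH of the account you will use. The following is a minimal Bash sketch. `require_commands` is a hypothetical helper and is not part of either package. It only checks that the binaries exist, not that the services are configured or running.

```shell
#!/usr/bin/env bash
# Minimal sketch: report whether each named command is on the PATH.
# require_commands is a hypothetical helper written for illustration.
require_commands() {
  local cmd missing=0
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "found: $cmd -> $(command -v "$cmd")"
    else
      echo "missing: $cmd"
      missing=1
    fi
  done
  # Non-zero exit status if any command was not found.
  return "$missing"
}
```

For example, `require_commands nginx haproxy` checks both programs. On many distributions both binaries live in /usr/sbin, so run the check as root or with /usr/sbin on your PATH. Afterwards, `nginx -v` and `haproxy -v` print the installed versions.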

Configure NGINX as a Web Server, Reverse Proxy, and Load Balancer for Web Applications

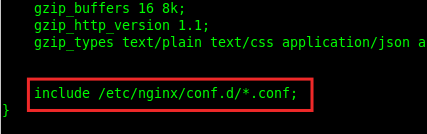

By default, NGINX’s configuration is stored in the /etc/nginx directory. The main configuration file is /etc/nginx/nginx.conf. You can create additional configuration files in /etc/nginx/conf.d or another custom subdirectory, then use the include directive to add them to the main configuration. For example, you can keep all the server blocks that serve your web applications in the /etc/nginx/conf.d subdirectory.
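The stock nginx.conf shipped by many distributions and by the nginx.org packages already includes /etc/nginx/conf.d/*.conf inside the http block. It is still worth confirming before you add server blocks there. The Bash sketch below assumes that stock include pattern. `conf_d_included` is a hypothetical helper name, not an NGINX tool. For an authoritative view of every file NGINX actually loads, `sudo nginx -T` tests the configuration and prints it in full.

```shell
#!/usr/bin/env bash
# Minimal sketch: succeed if the main NGINX config has an uncommented
# "include /etc/nginx/conf.d/*.conf;" line. Assumes the stock path;
# conf_d_included is a hypothetical helper. It does not check which
# block (http, stream, ...) the include line sits in.
conf_d_included() {
  local main_conf="${1:-/etc/nginx/nginx.conf}"
  # Leading whitespace is allowed; a line starting with "#" does not match.
  grep -Eq '^[[:space:]]*include[[:space:]]+/etc/nginx/conf\.d/\*\.conf[[:space:]]*;' "$main_conf"
}
```

Usage: `conf_d_included && echo "conf.d is included"`.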

The following is an example NGINX configuration for serving a React.js web application with a Node.js backend. The NGINX service runs on a server with IP address 10.10.1.4 (which will be used in the HAProxy configuration).

The first location block (location /) serves the static React files together with the root directive. The second location block (location /api) proxies API requests to the Node.js service with the proxy_pass directive. A third location block (location /assets) proxies asset requests to a separate backend. The same API service runs on two servers (10.10.1.20:9090 and 10.10.1.30:9090), so requests to it are load-balanced across the servers listed in the upstream block, using round-robin by default.

#cat /etc/nginx/conf.d/tickets.fg.app.conf

upstream fg-tickets-api {
    # Requests are distributed round-robin across these API servers by default.
    server 10.10.1.20:9090;
    server 10.10.1.30:9090;
}

server {
    listen 8080;
    server_name tickets.fg.app;

    root /var/www/frontends/fg-tickets-client/build;
    index index.html;
    client_max_body_size 10M;

    access_log /var/log/nginx/tickets.fg.app_access.log;
    error_log /var/log/nginx/tickets.fg.app_error.log;

    # Serve static React files; unknown paths fall back to index.html so
    # client-side routing works. Only the last try_files argument acts as
    # the fallback; every earlier argument is checked as a file.
    location / {
        try_files $uri $uri/ /index.html =404;
    }

    # Proxy API requests to the load-balanced Node.js backend.
    location /api {
        proxy_pass http://fg-tickets-api;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

        # Following is necessary for WebSocket support
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }

    # femis-backend is not defined in this file. It must be declared as an
    # upstream elsewhere or resolve as a hostname; otherwise "nginx -t"
    # reports "host not found in upstream".
    location /assets {
        proxy_pass http://femis-backend;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

        # Following is necessary for WebSocket support
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }
}
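By default, NGINX passes a request to the next upstream server when a connection fails. It also marks the failed server as temporarily unavailable. Because of this, a dead API node behind fg-tickets-api can go unnoticed. A quick reachability check of the upstream members can save debugging time. The Bash sketch below relies on Bash's /dev/tcp pseudo-device and the coreutils timeout command. `check_upstreams` is a hypothetical helper. It only proves that a TCP port accepts connections, not that the Node.js API responds correctly.

```shell
#!/usr/bin/env bash
# Minimal sketch: try a TCP connection to each host:port argument and print
# OK or FAIL. check_upstreams is a hypothetical helper; it tests only that
# the port is open, not that the application behind it is healthy.
check_upstreams() {
  local target host port failed=0
  for target in "$@"; do
    host="${target%:*}"   # text before the last colon
    port="${target##*:}"  # text after the last colon
    # Opening /dev/tcp/HOST/PORT makes Bash attempt a TCP connection;
    # timeout caps the wait at 3 seconds.
    if timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null; then
      echo "OK $target"
    else
      echo "FAIL $target"
      failed=1
    fi
  done
  return "$failed"
}
```

For the upstream above, run `check_upstreams 10.10.1.20:9090 10.10.1.30:9090`. A non-zero exit status means at least one member was unreachable.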

The next configuration shows how to use NGINX to serve a WordPress site from another server, 10.10.1.5. The first section defines a FastCGI content cache. The server block that follows contains the directives that enable the W3 Total Cache (W3TC) browser cache and rules that decide when to bypass the FastCGI cache. It also has a location block (location ~ \.php$) that passes PHP requests to PHP-FPM, a FastCGI server.

#cat /etc/nginx/conf.d/mywpsite.com.conf

# FastCGI cache zone and cache key. These http-level directives work here
# because files in conf.d are normally included inside the http block.
fastcgi_cache_path /var/log/nginx/cache/ levels=1:2 keys_zone=wpcache:200m max_size=10g inactive=2h use_temp_path=off;
fastcgi_cache_key "$scheme$request_method$host$request_uri";
fastcgi_ignore_headers Cache-Control Expires Set-Cookie;

server {
    listen 80;
    root /web/www/mywpsite.com;
    index index.php index.html index.htm;
    server_name mywpsite.com www.mywpsite.com;

    access_log /var/log/nginx/mywpsite.com_access.log;
    error_log /var/log/nginx/mywpsite.com_error.log;
    client_max_body_size 64M;

    # BEGIN W3TC Browser Cache
    gzip on;
    gzip_types text/css text/x-component application/x-javascript application/javascript text/javascript text/x-js text/richtext text/plain text/xsd text/xsl text/xml image/bmp application/java application/msword application/vnd.ms-fontobject application/x-msdownload image/x-icon application/json application/vnd.ms-access video/webm application/vnd.ms-project application/x-font-otf application/vnd.ms-opentype application/vnd.oasis.opendocument.database application/vnd.oasis.opendocument.chart application/vnd.oasis.opendocument.formula application/vnd.oasis.opendocument.graphics application/vnd.oasis.opendocument.spreadsheet application/vnd.oasis.opendocument.text audio/ogg application/pdf application/vnd.ms-powerpoint image/svg+xml application/x-shockwave-flash image/tiff application/x-font-ttf audio/wav application/vnd.ms-write application/font-woff application/font-woff2 application/vnd.ms-excel;

    location ~ \.(css|htc|less|js|js2|js3|js4)$ {
        expires 31536000s;
        etag on;
        if_modified_since exact;
        try_files $uri $uri/ /index.php?$args;
    }

    location ~ \.(html|htm|rtf|rtx|txt|xsd|xsl|xml)$ {
        etag on;
        if_modified_since exact;
        try_files $uri $uri/ /index.php?$args;
    }

    location ~ \.(asf|asx|wax|wmv|wmx|avi|avif|avifs|bmp|class|divx|doc|docx|exe|gif|gz|gzip|ico|jpg|jpeg|jpe|webp|json|mdb|mid|midi|mov|qt|mp3|m4a|mp4|m4v|mpeg|mpg|mpe|webm|mpp|_otf|odb|odc|odf|odg|odp|ods|odt|ogg|ogv|pdf|png|pot|pps|ppt|pptx|ra|ram|svg|svgz|swf|tar|tif|tiff|_ttf|wav|wma|wri|xla|xls|xlsx|xlt|xlw|zip)$ {
        expires 31536000s;
        etag on;
        if_modified_since exact;
        try_files $uri $uri/ /index.php?$args;
    }

    add_header Referrer-Policy "no-referrer-when-downgrade";
    # END W3TC Browser Cache

    # cache_settings: skip the FastCGI cache for POST requests, query strings,
    # admin/feed/sitemap URLs, and logged-in or commenting users.
    set $skip_cache 0;

    if ($request_method = POST) {
        set $skip_cache 1;
    }

    if ($query_string != "") {
        set $skip_cache 1;
    }

    if ($request_uri ~* "/wp-admin/|/xmlrpc.php|wp-.*.php|^/feed/*|/tag/.*/feed/*|index.php|/.*sitemap.*\.(xml|xsl)") {
        set $skip_cache 1;
    }

    if ($http_cookie ~* "comment_author|wordpress_[a-f0-9]+|wp-postpass|wordpress_no_cache|wordpress_logged_in") {
        set $skip_cache 1;
    }

    location / {
        try_files $uri $uri/ /index.php?$args;
    }

    location ~ \.php$ {
        try_files $uri =404;
        include /etc/nginx/fastcgi_params;
        fastcgi_read_timeout 3600s;
        fastcgi_buffer_size 128k;
        fastcgi_buffers 4 128k;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        # Adjust the socket path to the PHP-FPM version installed on the server.
        fastcgi_pass unix:/run/php/php7.3-fpm.sock;
        fastcgi_index index.php;

        # cache_settings
        fastcgi_cache wpcache;
        fastcgi_cache_valid 200 301 302 2h;
        fastcgi_cache_use_stale error timeout updating invalid_header http_500 http_503;
        fastcgi_cache_min_uses 1;
        fastcgi_cache_lock on;
        fastcgi_cache_bypass $skip_cache;
        fastcgi_no_cache $skip_cache;

        # Because this location defines its own add_header, the server-level
        # Referrer-Policy header is not inherited for PHP responses.
        add_header X-FastCGI-Cache $upstream_cache_status;
    }

    location ~ /\.ht {
        deny all;
    }
}
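Once this server block is live, the X-FastCGI-Cache header set in the PHP location shows whether a response came from the cache. The header carries the value of $upstream_cache_status, such as MISS, HIT, BYPASS, or EXPIRED. The sketch below is a hypothetical Bash helper that extracts that value from HTTP response headers on stdin. It assumes curl is available to fetch the headers.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the X-FastCGI-Cache value from HTTP response headers
# read on stdin. fastcgi_cache_status is a hypothetical helper name.
fastcgi_cache_status() {
  # Header names are case-insensitive; HTTP lines end in CR, which is
  # stripped. All input is consumed so an upstream pipe is never cut short.
  awk -F': *' '
    tolower($1) == "x-fastcgi-cache" && !found {
      gsub(/\r/, "", $2)
      print $2
      found = 1
    }'
}
```

For example, `curl -s -D - -o /dev/null http://mywpsite.com/ | fastcgi_cache_status` sends a GET request and prints only the cache status. A first request would typically show MISS and a repeat HIT. Requests matched by the $skip_cache rules (POSTs, query strings, admin URLs, logged-in cookies) should show BYPASS.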

I have many other NGINX deployments on servers with IP addresses 10.10.1.6, 10.10.1.7, 10.20.1.4, 10.20.1.5, and 10.20.1.6 serving the same applications.

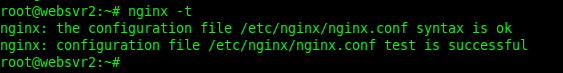

After configuring NGINX and saving the configuration files, it’s always a good idea to check the configuration syntax for correctness, using the following command:

$sudo nginx -t
OR (as root)
#nginx -t

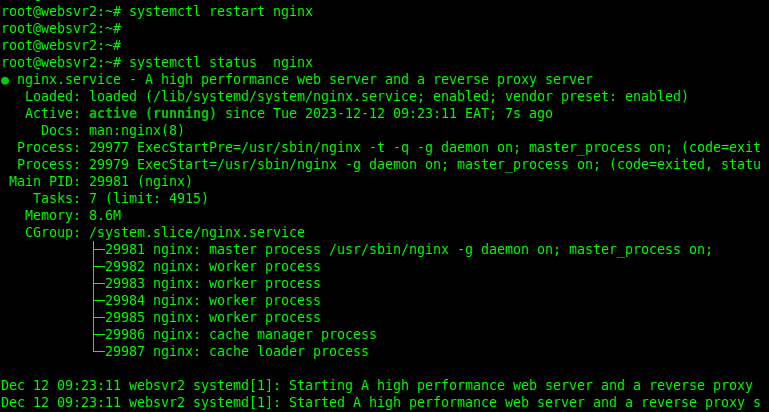

Then restart the NGINX service and check its status to ensure that it is up and running, using the following systemctl commands:

#systemctl restart nginx
#systemctl status nginx
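A restart with a broken configuration can leave NGINX stopped. It is therefore safer to chain the two steps so the restart only happens after nginx -t passes. The Bash sketch below does that and then asks systemd whether the unit is active. `nginx_checked_restart` is a hypothetical helper. NGINX_BIN and SYSTEMCTL_BIN are hypothetical overrides that default to the real commands, so the logic can be dry-run with stand-ins such as true and false.

```shell
#!/usr/bin/env bash
# Minimal sketch: restart NGINX only if "nginx -t" passes, then confirm the
# unit is active. nginx_checked_restart, NGINX_BIN and SYSTEMCTL_BIN are
# hypothetical names used for illustration.
nginx_checked_restart() {
  local nginx_bin="${NGINX_BIN:-nginx}"
  local systemctl_bin="${SYSTEMCTL_BIN:-systemctl}"

  # Validate the configuration first; stop here if it has errors.
  if ! "$nginx_bin" -t; then
    echo "nginx -t failed; NGINX was not restarted" >&2
    return 1
  fi

  # Restart the service, then use is-active --quiet, which exits 0 only
  # when the unit is active.
  "$systemctl_bin" restart nginx || return 1
  "$systemctl_bin" is-active --quiet nginx
}
```

Run it as root, for example `nginx_checked_restart && systemctl status nginx`. If you prefer not to interrupt active connections, `systemctl reload nginx` asks NGINX to re-read its configuration gracefully. With a reload, NGINX keeps serving with the old configuration if the new one fails to load.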